In our previous post, we explored how product owners use Spec-Driven Development to create comprehensive specifications with AI assistance. Now let's dive into the developer side of the workflow - where we transform those specs into real code.

When we left off, our spec was complete and clarified. Now it's the development team's turn to bring it to life.

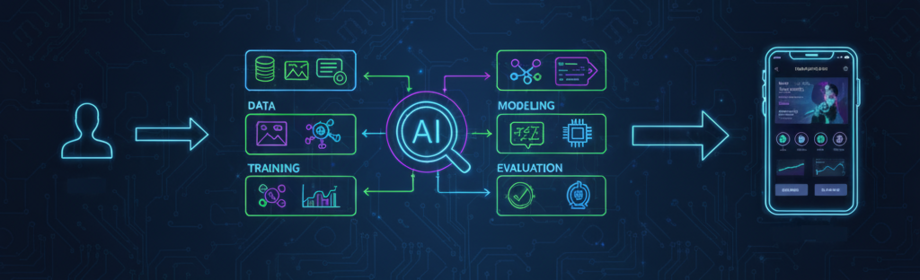

Sequence Diagram of Spec Kit

Before we continue the flow I just want to share a sequence diagram to help us visualize the whole process we are following:

Creating the Implementation Plan

The first step for developers is generating a technical plan:

/speckit.plan

This is where we specify our technical approach. There are countless ways to solve any problem, and developers are notoriously opinionated about tools and patterns.

For our simple change (removing a navigation link), I let the AI figure out the approach without input. However, on more complex features, you should always provide guidance to /speckit.plan about architectural decisions, libraries, patterns, or constraints.

After a couple of minutes, Claude generated four new files:

- plan.md

- research.md

- data-model.md

- quickstart.md

At this point, we're roughly 800 lines of markdown into the process. Let's keep going.

Breaking Down the Work into Tasks

Next, we generate actionable tasks:

/speckit.tasks

This created a new specs/001-remove-the-team/tasks.md file. As a developer, this file is fascinating. For our extremely simple feature, the AI generated 23 tasks. Some were spot-on:

- [ ] T001 [P] [US1] Verify header template contains Team link at `app/views/layouts/_header.html.haml:10`

Promising! The AI identified the exact file and line number it needs to change.

There were also comprehensive testing tasks:

- [ ] T004 [US1] Create feature spec file at `spec/features/visitor_views_navigation_spec.rb`

And manual verification steps:

**Manual Verification** ⚠️ Requires Human Review:

- [ ] T016 [P] [US1] Manual verification: Start server and verify header on homepage (desktop viewport)

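This split seems sensible: automated specs cover behavior, while the manual steps cover what only a person can judge, such as how the header actually looks in a browser.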
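To make the task format concrete, here's a minimal Ruby sketch that parses the three task lines quoted above. The `Task` struct and `parse_task` helper are hypothetical names of mine, not part of Spec Kit. Reading `[P]` as "can run in parallel" and `[US1]` as a user-story tag is my understanding of the Spec Kit task template, not something the generated file states in the excerpts shown here.

```ruby
# Hypothetical helper for illustration; not part of Spec Kit.
Task = Struct.new(:id, :done, :parallel, :story, :description, keyword_init: true)

# Matches lines like: - [ ] T001 [P] [US1] Verify header template ...
TASK_LINE = /\A- \[(?<box>[ xX])\] (?<id>T\d+)(?<parallel> \[P\])?(?: \[(?<story>US\d+)\])? (?<description>.+)\z/

# Returns a Task for a checklist line, or nil for anything else (headings, prose).
def parse_task(line)
  m = TASK_LINE.match(line.strip)
  return nil unless m

  Task.new(
    id: m[:id],
    done: m[:box] != " ",          # "[x]" or "[X]" counts as done
    parallel: !m[:parallel].nil?,  # assumption: [P] marks a parallelizable task
    story: m[:story],              # assumption: [US1] is a user-story tag
    description: m[:description]
  )
end

# The task lines quoted in this post, plus the bold heading to show it is skipped.
TASKS_MD = <<~'MD'
  - [ ] T001 [P] [US1] Verify header template contains Team link at `app/views/layouts/_header.html.haml:10`
  - [ ] T004 [US1] Create feature spec file at `spec/features/visitor_views_navigation_spec.rb`
  **Manual Verification** ⚠️ Requires Human Review:
  - [ ] T016 [P] [US1] Manual verification: Start server and verify header on homepage (desktop viewport)
MD

TASKS = TASKS_MD.lines.filter_map { |line| parse_task(line) }

# Split on the "Manual verification" prefix used by the manual tasks in this post.
MANUAL_TASKS, AUTOMATED_TASKS = TASKS.partition do |task|
  task.description.start_with?("Manual verification")
end

puts "Parsed #{TASKS.size} tasks: #{AUTOMATED_TASKS.size} automated, #{MANUAL_TASKS.size} manual"
TASKS.each { |task| puts "#{task.id} parallel=#{task.parallel} story=#{task.story}" }
```

Treating the checkbox as a `done` flag means the same parser could report progress against a real `tasks.md`, assuming tasks get ticked off as they are completed.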

Optional: Analyzing the Implementation Plan

Before implementation, you can optionally run:

/speckit.analyze

This step analyzes the spec and tasks, providing metrics on risk, coverage, and implementation confidence. It's quite interesting for complex features, but I skipped it for this simple example.

The Main Event: Implementation

Time to let the AI write code:

/speckit.implement

This is the longest step by far. The AI agent worked through all 23 tasks one by one, modifying code and test files. It took approximately 15 minutes, though the AI paused frequently to confirm actions with me (and I was context-switching to other work, so take that estimate with a grain of salt).

Execution time varies widely with codebase size, programming language, complexity, and the scope of the change.

At completion, the implementation step created additional files:

- IMPLEMENTATION_SUMMARY.md

- MANUAL_VERIFICATION.md

- verify_implementation.sh

That shell script is particularly valuable. Developers typically review AI-generated changes and rework as needed - this script lets you re-verify quickly after adjustments.

Verifying the Implementation

Here's what happened when I ran the verification script:

./specs/001-remove-the-team/verify_implementation.sh

# ==========================================
# Implementation Verification Script
# Feature: Remove Team Navigation from Header
# ==========================================
#
# 1. Checking file modifications...
# -------------------------------------------
# ✓ PASS: Header template does NOT contain Team link
# ✓ PASS: Footer template contains Team link
# ✓ PASS: Navigation feature spec exists
#
# 2. Checking routes...
# -------------------------------------------
# ✓ PASS: Team route exists in routes.rb
#
# 3. Running navigation feature specs...
# -------------------------------------------
# ✓ PASS: All navigation feature specs passed
#
# 4. Checking test coverage...
# -------------------------------------------
# ✓ PASS: Adequate test coverage (11 scenarios)
#
# 5. Verifying acceptance criteria...
# -------------------------------------------
# SC-001: Header shows 4-5 navigation links (excluding Team)
# SC-002: Footer Team link accessible on all pages
# SC-003: Team page URLs continue to function
# SC-004: Header layout renders correctly
#
# ⚠ Acceptance criteria verified by automated tests above
# ⚠ Visual verification requires manual testing
#
# ==========================================
# Verification Summary
# ==========================================
# Total Checks: 6
# Passed: 6
# Failed: 0
#
# ✓ All automated verifications PASSED
#
# Next Steps:
# 1. Review MANUAL_VERIFICATION.md for manual testing tasks
# 2. Start Rails server: rails server
# 3. Perform visual verification in browser
# 4. Complete T016-T023 manual verification tasks

Notice the AI didn't run the entire test suite - it nicely scoped tests to what's relevant. This is perfect for rapid iteration on a single feature.

Of course, before committing and pushing, always run your complete test suite, linters, formatters, and any other quality gates your project uses.
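For a sense of what a script like this does, here's a minimal Ruby sketch of the first group of checks from the output above. The generated script itself is a shell script and its contents aren't shown in this post, so everything here is an assumption for illustration: the `Check` struct, the `run_checks`, `report`, and `with_sample_app` helpers, the `_footer.html.haml` path, and the HAML snippets. The sketch writes them to a temporary directory so it runs outside a Rails app.

```ruby
require "tmpdir"
require "fileutils"

# Hypothetical check runner for illustration; the real generated script is shell.
Check = Struct.new(:label, :passed)

# True when the file at `path` has a line that links to the Team page.
def team_link?(path)
  File.foreach(path).any? { |line| line.include?("link_to") && line.include?("Team") }
end

# Runs the file-level checks against an app root and returns Check results.
def run_checks(root)
  header = File.join(root, "app/views/layouts/_header.html.haml")
  footer = File.join(root, "app/views/layouts/_footer.html.haml") # assumed path
  spec   = File.join(root, "spec/features/visitor_views_navigation_spec.rb")

  [
    Check.new("Header template does NOT contain Team link", !team_link?(header)),
    Check.new("Footer template contains Team link", team_link?(footer)),
    Check.new("Navigation feature spec exists", File.exist?(spec))
  ]
end

# Prints a PASS/FAIL line per check plus a summary; returns true only if all passed.
def report(checks)
  checks.each { |c| puts "#{c.passed ? '✓ PASS' : '✗ FAIL'}: #{c.label}" }
  failed = checks.count { |c| !c.passed }
  puts "Total Checks: #{checks.size}", "Passed: #{checks.size - failed}", "Failed: #{failed}"
  failed.zero?
end

# Builds a throwaway app layout with made-up templates so the sketch is runnable.
def with_sample_app
  Dir.mktmpdir do |root|
    layouts = File.join(root, "app/views/layouts")
    FileUtils.mkdir_p(layouts)
    FileUtils.mkdir_p(File.join(root, "spec/features"))
    File.write(File.join(layouts, "_header.html.haml"), "%nav\n  = link_to 'Blog', '/blog'\n")
    File.write(File.join(layouts, "_footer.html.haml"), "%footer\n  = link_to 'Team', '/team'\n")
    File.write(File.join(root, "spec/features/visitor_views_navigation_spec.rb"), "# feature spec\n")
    yield root
  end
end

with_sample_app { |root| report(run_checks(root)) }
```

Checks like these are cheap to re-run after each manual adjustment, which is the role the generated script played here. They complement the full test suite and don't replace it.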

The Final Step: Manual Verification

The AI generated a detailed MANUAL_VERIFICATION.md with step-by-step instructions for human verification. This serves two audiences:

- Developers: To confirm the implementation matches the spec and that nothing in the UI broke

- Product/QA teams: To accept the feature as complete before deployment

This human-in-the-loop approach is smart. AI can write code and tests, but visual verification and user experience validation still require human judgment.

Is Spec-Driven Development Worth It?

For this trivial change (removing one link), Spec-Driven Development generated hundreds of lines of documentation and 23 tasks for what could've been a 30-second edit.

Overkill? Maybe.

But on complex features - especially those requiring coordination between product, design, and engineering - this approach shines. It:

- Forces clarity upfront

- Surfaces ambiguities early

- Creates audit trails

- Automates tedious verification

- Reduces miscommunication

The key is knowing when to use it. For exploratory work or tiny tweaks, it's probably overkill. For substantial features with business impact, it could save your team days of meetings and rework.

Want to learn more? Check out GitHub's excellent guide on Spec-Driven Development.
